This one is easy to answer; we pretty much agree that you can’t. But when I thought about the reasons often given for this barrier, I found a flaw in the reasoning. The same kind of error was once made by Einstein himself, who later corrected it. The error is called ‘frame switching’, that is, attributing to one frame the measurement made by another.

I’m not going to dwell on the incorrect solution but move right along to the correct one.

Let us employ the popular twins of twin paradox fame. The twins initially share the same inertial frame, and then one accelerates away. We know from special relativity that the mass of the accelerated twin increases with his speed relative to the stay-at-home twin. If the unaccelerated twin is providing the power to push the accelerated twin, then the energy required will not grow as it does at everyday speeds but will rise much more quickly as the inertia of the moving twin increases, eventually rising to infinity.

So we know that we cannot accelerate an object to the speed of light that way.
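The stay-at-home twin’s energy bill can be sketched numerically. This is a minimal Python sketch, assuming the standard relativistic kinetic energy (γ − 1)mc² measured in his frame and a hypothetical 1 kg payload; the function names are illustrative. It shows that each successive 0.1c step costs more than the last, and that the total diverges as v approaches c.

```python
import math

C = 299_792_458.0  # speed of light in a vacuum, m/s


def kinetic_energy(mass_kg: float, v: float) -> float:
    """Relativistic kinetic energy (gamma - 1) * m * c^2 in joules,
    measured in the frame where the object moves at speed v (m/s)."""
    beta2 = (v / C) ** 2
    if beta2 >= 1.0:
        raise ValueError("kinetic energy is only finite for speeds below c")
    s = math.sqrt(1.0 - beta2)
    # gamma - 1 rewritten as beta^2 / (s * (1 + s)) to avoid cancellation at low speed
    return mass_kg * C**2 * beta2 / (s * (1.0 + s))


def step_costs(mass_kg: float, fractions_of_c: list[float]) -> list[float]:
    """Energy needed to go from each listed speed (as a fraction of c) to the next."""
    energies = [kinetic_energy(mass_kg, f * C) for f in fractions_of_c]
    return [b - a for a, b in zip(energies, energies[1:])]


if __name__ == "__main__":
    fractions = [k / 10 for k in range(10)]  # 0.0c, 0.1c, ..., 0.9c
    for f, cost in zip(fractions[1:], step_costs(1.0, fractions)):
        print(f"up to {f:.1f}c: {cost:.3e} J")  # each 0.1c step costs more than the last
    for f in (0.99, 0.9999, 0.999999):
        print(f"total at {f}c: {kinetic_energy(1.0, f * C):.3e} J")
```

At 1,000 m/s the result agrees with the everyday ½mv², which is a quick sanity check on the formula.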

Now we consider the other case: rockets on the accelerated twin’s vehicle. We note that each twin calculates the same speed difference between them. Assume that the accelerated twin accelerates in bursts and then coasts. Each time the accelerated twin coasts, he can count himself stationary and the other twin as the one that is moving. It takes no more energy to accelerate by 1,000 km/h from any speed that the twin attains, because at any speed he can count himself stationary, and there is nothing in special relativity, or in physics generally, that can dispute this. Thus adding another 1,000 km/h never takes any more energy at any speed; that is, it takes the same energy to accelerate from stationary (at rest with the other twin) as from 280,000 km/s.

What stops the twin from reaching the speed of light is not some barrier of any kind, but the problem that he would run out of universe to accelerate in. At the speed of light the distance between any two points in the direction of travel falls to zero, and so does the interval needed to travel that distance, so there simply isn’t any more universe left; even an infinite universe would not solve the problem.

We may try to argue from the addition of velocities, but that only applies if an object leaves our accelerated twin’s rocket at some speed in the direction of travel, and only by the measure of the unaccelerated twin. As far as the accelerated twin is concerned, he is at rest before each acceleration step…
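What the unaccelerated twin measures when the rocket fires a series of identical bursts can be sketched in Python. This minimal sketch assumes each burst adds the same 1,000 km/h in the rocket’s momentary rest frame and combines the bursts in the standard way for collinear boosts, by adding rapidities (φ = artanh(v/c)); the function name and burst counts are illustrative choices, not taken from the text. Each burst looks identical on board, yet the speed recorded by the stay-at-home twin creeps towards c without reaching it, while a naive sum of the bursts would overshoot c.

```python
import math

C_KMS = 299_792.458          # speed of light, km/s
BURST_KMS = 1_000 / 3_600    # 1,000 km/h expressed in km/s


def speed_after_bursts(n_bursts: int, burst_kms: float = BURST_KMS) -> float:
    """Speed (km/s) the stay-at-home twin measures after n identical bursts,
    each adding burst_kms in the rocket's momentary rest frame."""
    rapidity_per_burst = math.atanh(burst_kms / C_KMS)
    # Rapidities of collinear boosts simply add; speed is c * tanh(total rapidity).
    return C_KMS * math.tanh(n_bursts * rapidity_per_burst)


if __name__ == "__main__":
    for n in (1, 1_000_000, 2_000_000, 4_000_000, 8_000_000):
        naive = n * BURST_KMS  # what simply adding 1,000 km/h n times would give
        print(f"{n:>9} bursts: {speed_after_bursts(n):12.3f} km/s (naive sum {naive:14.3f} km/s)")
```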

What the accelerated twin would notice is that the universe seems to be getting ever shorter in the direction of his travel, so there is some indication of motion by that measure. Eventually, the whole universe would appear to be compacted to a point, so no further acceleration is possible for that reason.
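The shortening of the universe ahead is the standard length contraction, L = L₀√(1 − v²/c²). This is a minimal Python sketch, assuming a hypothetical stretch of space 100 light-years long in the stay-at-home twin’s frame; the contracted length falls towards zero as v approaches c but remains nonzero at every speed below c.

```python
import math


def contracted_length(proper_length: float, beta: float) -> float:
    """Length measured by a traveller moving at beta = v/c along a distance
    whose length in its own rest frame is proper_length (any unit)."""
    if not 0.0 <= beta < 1.0:
        raise ValueError("beta must satisfy 0 <= beta < 1")
    return proper_length * math.sqrt(1.0 - beta * beta)


if __name__ == "__main__":
    distance_ly = 100.0  # hypothetical distance ahead, in light-years
    for beta in (0.5, 0.9, 0.99, 0.9999, 0.999999):
        print(f"v = {beta}c: {contracted_length(distance_ly, beta):.4f} light-years")
```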

I have seen it written that as the accelerated twin gains mass, it becomes increasingly difficult for that twin to accelerate. This is not the case. It becomes more difficult for the unaccelerated twin to push the accelerated twin (because he measures the increase in mass; the accelerating twin does not), but not for the accelerating twin, who can accelerate forever, or until he runs out of universe, which will occur before he reaches the speed of light.

The key points are:

1) The accelerated twin’s inertial frame has the same mass, length and temporal frequency (clock rate) regardless of his speed (Einstein said that all the physics remains the same);
2) At the speed of light the accelerated twin would literally leave the universe for any measurable interval on his clock.

Note also that the rocket’s energy for each acceleration will be measured differently by the two twins. The unaccelerated twin will measure each ‘burn’ as taking ever longer as the speed increases, but he will also measure the temperature and pressure falling (power decreases, thrust decreases), so he measures the same energy (power × interval) for each burn.

Note also that if the amount of energy required to accelerate changed with your speed, then, using a fine enough measurement, you could determine when you were absolutely stationary (as this would require the minimum energy for acceleration), thus establishing a preferred or absolutely stationary inertial frame. That would violate one of the most basic principles of relativity theory, i.e. that there is no preferred or absolutely stationary inertial frame (against which all other frames could be compared or measured).

Many regard the speed of light as the ultimate speed limit, a fundamental law of physics. Albert Einstein believed that particles could never travel faster than the speed of light, and that doing so would constitute time travel. For those who regret something in their past, this is potentially an interesting question; for those who have led a completely perfect life, it is an interesting piece of knowledge to have nonetheless. There are also other reasons to research this question. The closest star other than our Sun, Proxima Centauri, is about twenty-five trillion miles away, which would take over ten thousand years to reach with the fastest spaceship we have today. Therefore, if we ever wish to truly explore our universe, we must start to explore the limitations of our transport.
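The Proxima Centauri figures can be checked with a little arithmetic. This is a minimal Python sketch, assuming the international mile, the IAU light-year and a hypothetical cruising speed of 20 km/s; that speed is an illustrative number, not a claim about any particular spacecraft.

```python
MILE_M = 1_609.344                    # metres in an international mile
LIGHT_YEAR_M = 9_460_730_472_580_800  # metres in a light-year (IAU definition)
YEAR_S = 365.25 * 24 * 3_600          # seconds in a Julian year


def miles_to_light_years(miles: float) -> float:
    """Convert a distance in miles to light-years."""
    return miles * MILE_M / LIGHT_YEAR_M


def travel_time_years(miles: float, speed_km_s: float) -> float:
    """Years needed to cover the distance at a constant speed (km/s)."""
    return miles * MILE_M / (speed_km_s * 1_000) / YEAR_S


if __name__ == "__main__":
    proxima_miles = 25e12  # "about twenty-five trillion miles"
    print(f"distance: {miles_to_light_years(proxima_miles):.2f} light-years")
    print(f"at 20 km/s: {travel_time_years(proxima_miles, 20):,.0f} years")
```

Twenty-five trillion miles comes out at roughly 4.25 light-years, and at the assumed 20 km/s the trip takes tens of thousands of years, consistent with "over ten thousand years".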

To answer this question I must first define a few parameters, because as it stands the question is rather ambiguous. I could say yes, but then slow light down before conducting my ‘race’, and show that the particle did indeed travel faster than the light did. There are many ways in which this can be achieved; for example, the Danish physicist Lene Vestergaard Hau was able to slow light down to 17 m/s, roughly thirty-eight miles per hour, enabling my car to travel faster than light. Her team achieved this by cooling a cloud of atoms into a Bose-Einstein condensate, a fraction of a degree above absolute zero, before passing the beam of light through it.

Obviously this is cheating. Using the wave-particle duality of light to think of light as a particle, we know that the light is still travelling at the speed of light in a vacuum; it just has further to travel, as it interacts with all the particles in the material. In other words, the distance the light needs to travel has been extended, in much the same way as the resistance of a metal increases when it is heated, because the electrons collide more often with the ions in the metal and therefore take longer to travel through it. It is therefore only fair to define the speed of light as the speed of light in a vacuum, known as c, which is 3.00 × 10⁸ m/s to three significant figures. We commonly slow light down in everyday life, for example when it passes through glass, but does this mean we can speed it up? Can we make light travel faster than light?

This is known as superluminal propagation, and it is the first faster-than-light example I would like to talk about. It seems possible to send pulses of light faster than c over small distances; however, interpreting these results has been difficult because the light pulses always get distorted in the process. In 2000 Mugnai reported the propagation of microwaves over quite large distances, tens of centimetres, at speeds 7% faster than c. Impressive as this is, research by Wang has shown a much larger superluminal effect for pulses of visible light, in which the light travels so fast that the peak of the pulse leaves the medium before the peak of the incoming pulse has entered it. The observed group velocity has been calculated as −c/310. The easiest way to understand this negative velocity is to interpret it as meaning that the energy of the wave moved a positive distance in a negative time; in other words, the pulse emerged from the medium before entering it. Although light can be considered as a particle, the photon has no mass, so this is not a true example of a particle travelling faster than c.
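A negative group velocity is easiest to picture through transit time = length ÷ group velocity. This is a minimal Python sketch; the 0.3 m microwave path and the 6 cm length of the visible-light medium are assumed, illustrative lengths, while 1.07c and −c/310 are the figures quoted above.

```python
C = 299_792_458.0  # speed of light in a vacuum, m/s


def transit_time(length_m: float, group_velocity: float) -> float:
    """Time (s) for the pulse peak to cross the medium; a negative value means
    the peak leaves before the incoming peak arrives."""
    return length_m / group_velocity


def advance_over_vacuum(length_m: float, group_velocity: float) -> float:
    """How much earlier (s) the peak emerges than after crossing the same length of vacuum."""
    return length_m / C - transit_time(length_m, group_velocity)


if __name__ == "__main__":
    # Microwaves 7% faster than c over an assumed 0.3 m path
    print(f"microwave advance: {advance_over_vacuum(0.3, 1.07 * C) * 1e12:.1f} ps")
    # Visible pulse with group velocity -c/310 in an assumed 6 cm medium
    print(f"visible-light transit time: {transit_time(0.06, -C / 310) * 1e9:.1f} ns")
```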

The second thing I will talk about is special relativity and its consequence, time dilation. The theory of special relativity states that the speed of light in a vacuum is the same in all inertial reference frames. As a result, the speed of light cannot be altered by the motion of its source: if I were driving a car at half the speed of light and turned on the headlights, the speed of the light would still be measured as c, rather than 1.5c as might seem logical. This is perhaps counterintuitive, as at much smaller speeds we would simply add velocities; for example, driving a car at 50 mph and throwing a stone forward at 20 mph would result in the stone having a velocity of 70 mph in that direction. To illustrate time dilation I will show the effect of moving at high speeds on two clocks.
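Before turning to the clocks, the headlight and stone examples can both be checked with the relativistic velocity-addition formula w = (u + v)/(1 + uv/c²), which reduces to ordinary addition at everyday speeds. This is a minimal Python sketch; speeds are in units of c unless c is passed explicitly, and the value of c in miles per hour is derived from 299,792,458 m/s.

```python
C_MPH = 299_792_458 * 3_600 / 1_609.344  # speed of light in mph (about 6.7e8)


def add_velocities(u: float, v: float, c: float = 1.0) -> float:
    """Relativistic sum of two collinear velocities u and v (same units as c)."""
    return (u + v) / (1 + u * v / c**2)


if __name__ == "__main__":
    print(add_velocities(0.5, 1.0))         # headlights from a car at 0.5c: light still at 1.0c
    print(add_velocities(0.5, 0.5))         # two half-light-speed velocities combine to 0.8c, not 1.0c
    print(add_velocities(50, 20, c=C_MPH))  # stone thrown from the car: just under 70 mph
```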

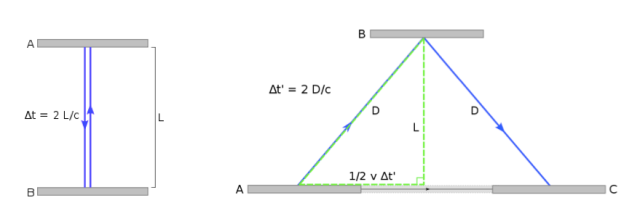

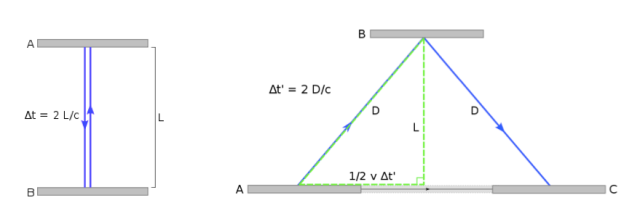

In this situation the clock works by bouncing light between the two

mirrors A and B, this represents one tick, so for the sake of argument I

will say that it takes one second for the light to travel the full

distance between the two mirrors or 2L. Both images depict the same

clock, however the one on the right is moving close to light speed in

relation to the other. The speed of light must be c in both frames,

however as you can see the light has further to travel in the moving

clock. As 2D is longer than 2L, the moving clock appears to be running

more slowly from the frame of the stationary clock. Using these two

examples it is possible to derive the change in time and thus the time

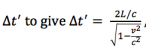

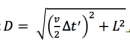

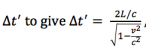

dilation. The first clock gives us the simple equation  and the moving clock shows

and the moving clock shows  . Using Pythagoras theorem we can show that

. Using Pythagoras theorem we can show that  , this can then be substituted back into the equation and rearranged for

, this can then be substituted back into the equation and rearranged for , finally giving .

, finally giving .

This expresses the fact that a moving clock runs slow as measured by a stationary observer: time slows down the faster you go. The main point of this is that it is possible to travel a distance of one light year in less than a year. This statement needs explaining, however, because for a stationary onlooker, say a person on Earth, the journey will take longer than a year; it is the traveller who will have experienced less than a year. This is commonplace at CERN, where short-lived particles such as muons, with a mean lifetime of 2.2 microseconds, are accelerated to speeds that hugely extend their lifetime as measured in the laboratory. As we have no stationary point from which to measure the universe, owing to the Earth’s constant movements, we will never have a standard time. A clock on Earth synchronized with one on a planet light years away will not stay in sync for very long, because the mass of each planet and its velocity determine how time is experienced there. From here I can crudely argue that if there will never be a standard time, the one that matters most is our own. Having said that, I have not proved that it would be faster than light, and this is a slightly unscientific argument; however, it is interesting to say that if there were no other people in the world to offer an alternative view, I would say that I can travel faster than light.
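The time-dilation formula Δt = Δt₀/√(1 − v²/c²) can be applied to both examples in this paragraph. This is a minimal Python sketch, assuming a hypothetical speed of 0.99c for both the muon and the traveller and ignoring the acceleration phases of the journey. It gives a lab lifetime several times 2.2 μs, and a one-light-year trip that takes just over a year on Earth but well under a year for the traveller.

```python
import math


def lorentz_factor(beta: float) -> float:
    """gamma = 1 / sqrt(1 - v^2/c^2) for beta = v/c."""
    return 1.0 / math.sqrt(1.0 - beta * beta)


def lab_lifetime(proper_lifetime: float, beta: float) -> float:
    """Lifetime measured in the lab for a particle moving at beta."""
    return proper_lifetime * lorentz_factor(beta)


def journey_years(distance_ly: float, beta: float) -> tuple[float, float]:
    """(Earth-frame duration, traveller's elapsed time) for a constant-speed trip."""
    earth = distance_ly / beta  # light-years divided by a fraction of c gives years
    return earth, earth / lorentz_factor(beta)


if __name__ == "__main__":
    beta = 0.99  # hypothetical speed
    print(f"muon lifetime in the lab: {lab_lifetime(2.2, beta):.1f} microseconds")
    earth, traveller = journey_years(1.0, beta)
    print(f"one light-year: {earth:.3f} years on Earth, {traveller:.3f} years for the traveller")
```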

My next example involves space-time distortion. In the rapid expansion after the Big Bang, regions of the universe moved apart at speeds much faster than 3.00 × 10⁸ m/s. Special relativity does not limit how fast space-time itself can be distorted. Miguel Alcubierre hypothesized that a spacecraft could be enclosed in a ‘bubble’, with exotic matter used to rapidly expand space-time at the back of the bubble, moving you further away from objects behind you, and to contract it at the front, bringing objects ahead of you closer. This would be a new way of travelling, in which it is space-time that moves rather than the spaceship. In this way the ship would reach a destination much faster than a beam of light travelling outside the bubble, without anything travelling faster than c inside the bubble.[6] This method has one important drawback: it violates the weak, dominant and strong energy conditions. Both the weak and the dominant energy conditions require the energy density to be non-negative for all observers, so negative energy would be needed, which may or may not exist.[7]

A particle which always travels faster than the speed of light is known as a tachyon. Tachyons, if they exist, would both answer my question immediately and have very interesting properties. The equation

$$E = \frac{m_0 c^2}{\sqrt{1 - v^2/c^2}},$$

where $m_0$ is the rest mass, has often been used to show that particles with mass can never reach the speed of light, because doing so would require infinite energy. However, if the same equation were applied to tachyons, it would show two things. Firstly, a tachyon would never be able to decelerate below the speed of light, as crossing this limit from either side would require infinite energy. Secondly, it would have an imaginary mass. When v is larger than c the denominator in the above equation becomes imaginary, and as the total energy must be real, the numerator must also be imaginary. Therefore the rest mass must be imaginary, as an imaginary number divided by another imaginary number is real.

The existence of tachyons would cause certain causality paradoxes. If they could be used to send signals faster than c, then for a fast enough signal, of two frames moving at 0.6c and −0.6c, there would always be one in which the signal was received before it was sent. Effectively, the signal would have moved back in time. Special relativity states that the laws of physics work the same in every frame, so if faster-than-light signals can be sent in one frame, they must be possible in all of them. Suppose, then, that A sends a signal to B which moves faster than light in A’s frame and therefore backwards in time in B’s frame. B could then reply with a signal that is faster than light in B’s frame but backwards in time in A’s frame, so it could end up that A received the reply before sending the original message, challenging causality and causing paradoxes.
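Both tachyon arguments can be explored numerically in units where c = 1. In this minimal Python sketch, the first function evaluates E = m₀c²/√(1 − v²/c²) with complex arithmetic, so that for v > c a hypothetical imaginary rest mass m₀ = i gives a real energy that grows without bound as v approaches c from above. The second applies the Lorentz transformation Δt′ = γ(Δt − vΔx/c²) to a hypothetical signal travelling at 4c, showing that in the frame moving at +0.6c it arrives before it was sent, while in the frame moving at −0.6c it does not. The function names and the 4c signal speed are illustrative assumptions.

```python
import cmath
import math


def total_energy(rest_mass: complex, v: float, c: float = 1.0) -> complex:
    """E = m0 c^2 / sqrt(1 - v^2/c^2), using complex arithmetic so that
    speeds above c (and an imaginary rest mass) can be evaluated."""
    return rest_mass * c**2 / cmath.sqrt(1 - (v / c) ** 2)


def interval_in_frame(dt: float, dx: float, frame_v: float, c: float = 1.0) -> float:
    """Time between sending and receiving a signal, as measured in a frame
    moving at frame_v along the signal's direction (Lorentz transformation)."""
    gamma = 1.0 / math.sqrt(1.0 - (frame_v / c) ** 2)
    return gamma * (dt - frame_v * dx / c**2)


if __name__ == "__main__":
    for v in (1.000001, 1.5, 3.0):
        print(f"tachyon at {v}c: E = {total_energy(1j, v):.4f}")
    # Hypothetical signal covering 4 light-seconds in 1 second (4c)
    for frame_v in (0.6, -0.6):
        print(f"frame at {frame_v:+}c: reception - sending = {interval_in_frame(1.0, 4.0, frame_v):+.2f} s")
```

For an ordinary signal slower than light, the same transformation gives a positive interval in every frame moving slower than c, which is why the ordering problem only arises for faster-than-light signals.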


The mathematical case that prohibits faster-than-light travel uses the equation E = mc², which shows that energy and mass are equivalent. This equation implies that the more energy you inject into a rocket, the more mass it gains, and the more mass it gains, the harder it is to accelerate. Boosting it to the speed of light is impossible because in the process the rocket would become infinitely massive and would require an infinite amount of energy.

A wormhole is effectively a shortcut through space-time. A wormhole connects two places in space-time and allows a particle to cover a distance faster than a beam of light would outside the wormhole. The particles inside the wormhole are not going faster than the speed of light; they are only able to beat light because they have a smaller distance to travel. Scientists imagine that the opening of a wormhole would look something like a bubble. It is theorized that a wormhole allowing travel in both directions, known as a Lorentzian traversable wormhole, would require exotic matter. As wormholes connect two points in both space and time, they theoretically allow travel through time as well as space. This fascinated many scientists, and Morris, Thorne and Yurtsever worked out how to convert a wormhole traversing space into one traversing time. The process involves accelerating one opening of the wormhole relative to the other, before bringing it back to its original location. This uses a process I mentioned earlier, time dilation, which would cause the end of the wormhole that was accelerated to have aged less. Say there were two clocks, one at each opening of the wormhole: after this tampering, the clock at the accelerated end might read 2000, whereas the clock at the stationary end showed 2013, so that a traveller entering one end would find himself or herself in the same region but 13 years in the past. As fantastic as this may sound, many believe it would be impossible; there are predictions that a loop of virtual particles would circulate through the wormhole with ever-increasing intensity, destroying it before it could be of any use.
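The 13-year gap between the two mouths follows the same arithmetic as the twin paradox. This is a minimal Python sketch, assuming (hypothetically) that the moved mouth travels at a constant speed for the whole trip, with the brief acceleration and turnaround phases ignored. It estimates how long the trip must last, in the stationary mouth’s frame, to open up a given gap.

```python
import math


def age_gap_years(trip_years: float, beta: float) -> float:
    """How much less the moving mouth's clock advances than the stationary
    one's over a trip lasting trip_years in the stationary frame."""
    return trip_years * (1.0 - math.sqrt(1.0 - beta * beta))


def trip_for_gap(gap_years: float, beta: float) -> float:
    """Stationary-frame trip length needed to produce the given gap."""
    return gap_years / (1.0 - math.sqrt(1.0 - beta * beta))


if __name__ == "__main__":
    for beta in (0.6, 0.9, 0.99):
        print(f"v = {beta}c: about {trip_for_gap(13.0, beta):.1f} years to open a 13-year gap")
```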

Over the course of this essay I have shown a few ways in which the speed of light can be ‘beaten’, but ultimately I have failed to produce any proven faster-than-light particles, and for this reason I must conclude that particles cannot travel faster than the speed of light. However, the topics I have raised are still at a developing stage, and I have shown that there are many diverse areas of physics which are both being explored and in need of exploration. Many of these areas are still heavily theoretical and under further research, but our technology is advancing at an exponential rate and the human race is steadily getting smarter, so with time and perseverance we will know the answers to questions such as these and many more. To infinity, and beyond.

Thanks Robert Karl Stonjek